Retailers have always sought to understand the subtle human moments that drive conversion: a glance, a hesitation, a smile, or a decision not to engage. With Vision-Language Models (VLMs), these nuances can now be interpreted, quantified, and turned into measurable business insights.

What is VLM in Retail?

At its core, a VLM is an AI technology that interprets video context much as a human would. It doesn’t just detect that two people are standing together; it infers why they are. Was the staff member offering help? Did the shopper decline politely or engage further? These layers of understanding redefine how we measure human interactions in stores.

Why Retailers Should Care

Traditional analytics can tell you how many people entered, how long they stayed, and where they went. But VLM tells you what really happened. It can reveal:

- When staff approached a shopper and how that interaction went.

- Whether shoppers engaged with a new kiosk or walked away confused.

- How customers reacted to a new product display – intrigued, skeptical, or delighted.

By identifying these nuances, management teams can make better decisions: where to place staff, how to train them, what store layouts work, and which age groups struggle with new self-service technologies.

From Insight to Action

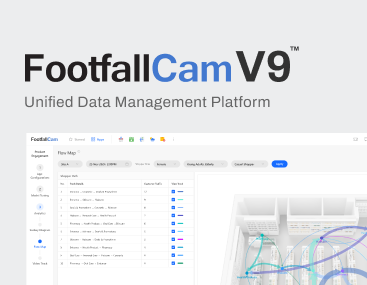

Imagine defining a specific type of customer behaviour – say, interest without purchase or confusion at self-checkout. With VLM, FootfallCam can search through millions of hours of video footage to find every instance of that behaviour and compile the results into reliable statistical metrics. This turns subjective observation into objective, actionable insight.
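
For readers who want to picture the last step, here is a minimal sketch of how detected behaviours might be compiled into a per-store metric. It is an illustration only, not FootfallCam's implementation: the `Detection` record, the behaviour labels, the `behaviour_rate` helper, and the visit counts are all hypothetical, and the detections are assumed to have already been produced by a VLM.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One behaviour instance found in footage (hypothetical record)."""
    store: str
    behaviour: str  # hypothetical label, e.g. "interest_without_purchase"


def behaviour_rate(detections, behaviour, visits_per_store):
    """Return, per store, detected instances of `behaviour` per visit."""
    # Count matching detections for each store.
    counts = Counter(d.store for d in detections if d.behaviour == behaviour)
    # Normalise by visits; stores with no recorded visits are skipped.
    return {
        store: counts[store] / visits
        for store, visits in visits_per_store.items()
        if visits > 0
    }


# Made-up sample data, purely for illustration.
detections = [
    Detection("Store A", "interest_without_purchase"),
    Detection("Store A", "interest_without_purchase"),
    Detection("Store A", "confusion_at_self_checkout"),
    Detection("Store B", "interest_without_purchase"),
]
visits = {"Store A": 100, "Store B": 50}

rates = behaviour_rate(detections, "interest_without_purchase", visits)
```

Normalising by visits rather than reporting raw counts keeps busy and quiet stores comparable.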

Practical, Scalable, and Affordable

All of this can be done with minimal setup – often within just a few hours of work. For retailers, that means a fast and affordable way to tap into an entirely new dimension of understanding without the need for complex reconfiguration or new infrastructure.

FootfallCam’s VLM is opening new possibilities for retail analytics, bringing human understanding back into data-driven decisions. It’s no longer about how many people walked in, but what happened when they did.

Speak with us to learn how your existing camera infrastructure can deliver deeper insights than ever before.

#AIinRetail #VisionLanguageModel #RetailAnalytics #ShopperBehaviour #CustomerInsights #DataDrivenRetail #RetailInnovation #FootfallCam #CustomerExperience #SmartRetail