Vision-Language Analytics

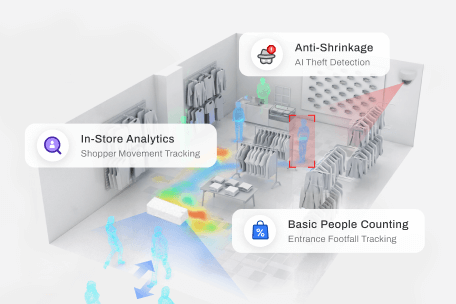

Multimodal vision-language AI for in-store video analysis: identifies shopper behaviour, maps staff-shopper interactions, and monitors SOP adherence through semantic understanding of scenes.

“Vision-Language Analytics for Behaviour Understanding”

Vision-Language Models (VLMs) represent the next advancement in video analytics. Rather than measuring only positional changes or dwell time, a VLM enables a system to interpret actions and sequences of behaviour within short video clips. This gives businesses a higher-order understanding of how customers and staff interact within a space, without increasing operational complexity or compromising privacy.

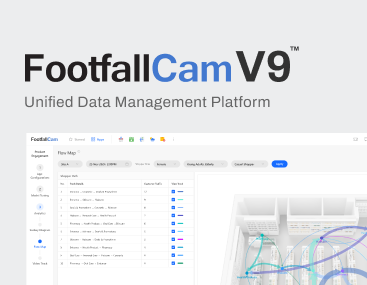

FootfallCam applies VLMs in a controlled, domain-specific way. The model operates entirely on-device, analysing brief video segments and converting them into clear, high-level behaviour categories. These categories are designed for aggregation, statistical analysis, and decision-making, not for identifying individuals or generating personal profiles. The output is structured behavioural data that enhances traditional people counting, queue analytics, and operational performance metrics.

The system is built around four design principles:

#1: Behaviour-Level Understanding

The model recognises sequences such as approaching, waiting, browsing, interacting with staff, or receiving assistance. It moves beyond simple coordinates to interpret the intent behind customer actions, providing richer insight into service quality, engagement, and operational flow.

#2: User-Defined Behaviour Categories

Businesses can specify, in plain language, the types of behaviour they want to monitor, such as “customer waiting for service” or “staff using handheld PoS.” The system classifies activities into these categories, producing structured, high-level statistics tailored to operational goals.
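As an illustration only, plain-language categories like these could be captured in a small structure and turned into a closed-set instruction for a classifier. The sketch below is not FootfallCam's configuration format or API: `BehaviourCategory`, `CATEGORIES`, and `build_prompt` are hypothetical names, and the prompt wording is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BehaviourCategory:
    """Hypothetical container for one user-defined behaviour."""
    key: str          # short identifier used in reports
    description: str  # plain-language definition supplied by the business


# The two example behaviours quoted in the text above.
CATEGORIES = [
    BehaviourCategory("waiting_for_service", "customer waiting for service"),
    BehaviourCategory("staff_handheld_pos", "staff using handheld PoS"),
]


def build_prompt(categories):
    """Turn the category list into a closed-set classification instruction."""
    lines = ["Classify the clip into exactly one of these behaviours:"]
    for category in categories:
        lines.append(f"- {category.key}: {category.description}")
    # A fallback label keeps the model from forcing a clip into a category.
    lines.append("- none: no listed behaviour is visible")
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_prompt(CATEGORIES))
```

Keeping the category list closed, with an explicit `none` option, means every clip maps to a label the business has defined or to nothing at all.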

#3: Explainable Classification

Each behavioural classification is accompanied by a short, plain-English explanation describing the cues that led to the decision. This supports transparency, validation, and alignment with internal operational definitions.
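One way to picture this pairing of label and explanation is a record that carries both. The following is a minimal sketch under stated assumptions: it supposes the model returns a JSON object with `category` and `explanation` fields, which the source does not specify, and `ClipClassification` and `parse_model_output` are hypothetical names.

```python
import json
from dataclasses import dataclass


@dataclass
class ClipClassification:
    """Hypothetical record: one label plus the reasoning behind it."""
    clip_id: str
    category: str
    explanation: str


def parse_model_output(clip_id, raw_json, allowed_categories):
    """Parse an assumed JSON reply and keep only in-scope labels."""
    data = json.loads(raw_json)
    category = data.get("category", "none")
    if category not in allowed_categories:
        # Anything outside the requested categories is discarded,
        # including whatever explanation came with it.
        return ClipClassification(clip_id, "none", "")
    return ClipClassification(clip_id, category, data.get("explanation", ""))


if __name__ == "__main__":
    reply = (
        '{"category": "waiting_for_service", '
        '"explanation": "Customer stands at the counter with no staff present."}'
    )
    result = parse_model_output("clip-001", reply, {"waiting_for_service"})
    print(result.category, "|", result.explanation)
```

Storing the explanation next to the label lets a reviewer check a sample of decisions against the organisation's own definition of each behaviour.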

#4: Guardrailed and Privacy-Centric by Design

The model analyses only the behaviours explicitly requested. It cannot infer identities, personal attributes, or any information outside the defined scope. All processing occurs locally on the device, and only aggregated behaviour counts are transmitted for reporting.
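The aggregation step described here can be sketched as a simple tally. This is an illustrative assumption about how per-clip labels might be reduced to counts before leaving the device, not a description of FootfallCam's implementation; `aggregate_counts` and the label names are hypothetical.

```python
from collections import Counter


def aggregate_counts(labels, allowed_categories):
    """Reduce per-clip labels to one count per requested category.

    Labels outside the requested scope are dropped, and no per-clip
    detail survives: the result is the only thing that would be reported.
    """
    counts = Counter(label for label in labels if label in allowed_categories)
    # Zero-fill so every requested category appears in the report.
    return {key: counts.get(key, 0) for key in sorted(allowed_categories)}


if __name__ == "__main__":
    clip_labels = [
        "waiting_for_service",
        "staff_handheld_pos",
        "waiting_for_service",
        "none",
    ]
    allowed = {"waiting_for_service", "staff_handheld_pos"}
    print(aggregate_counts(clip_labels, allowed))
```

Because only the count dictionary is produced, the reporting side never receives clip identifiers, timestamps of individual events, or free-text descriptions.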

By integrating VLMs with traditional footfall and queue analytics, FootfallCam enables organisations to measure aspects of the customer journey that were previously unobservable. This helps validate staffing models, evaluate service responsiveness, optimise mobile PoS deployment, and improve the overall visitor experience, all through structured, anonymised behavioural insight.

Copyright © 2002 - 2026 FootfallCam™. All Rights Reserved.
